Senior management is more familiar with AI technologies than IT and security staff are, according to a report from the Cloud Security Alliance commissioned by Google Cloud. The report, released April 3, addressed whether IT and security professionals fear AI will replace their jobs, the benefits and challenges of the rise of generative AI, and more.

Of the IT and security professionals surveyed, 63% believe AI will improve security within their organization. Another 24% are neutral about the impact of AI on security measures, while 12% do not believe AI will improve security within their organization. Of the people surveyed, only a few (12%) predict that AI will replace their jobs.

The survey behind the report was conducted internationally in November 2023, with responses from 2,486 IT and security professionals and senior leaders at organizations in the Americas, APAC, and EMEA.

Cybersecurity professionals not in leadership positions are less clear than senior management about potential use cases for AI in cybersecurity, with only 14% of staff (compared to 51% of C-levels) saying they are “very clear.”

“The disconnect between senior management and staff in understanding and implementing AI highlights the need for a strategic, unified approach to successfully integrate this technology,” said Caleb Sima, chair of the Cloud Security Alliance's AI Safety Initiative, in a press release.

Some questions in the report specified that answers should be related to generative AI, while other questions used the term “AI” broadly.

The AI knowledge gap in security

C-level professionals face top-down pressures that may have led them to be more aware of AI use cases than security professionals.

Most C-suite professionals (82%) say their executive leadership and boards are pushing for AI adoption. However, the report states that this approach could cause implementation problems in the future.

“This may highlight a lack of appreciation of the difficulty and knowledge required to adopt and implement such a unique and disruptive technology (e.g., prompt engineering),” wrote lead author Hillary Baron, senior technical director of research and analytics at the Cloud Security Alliance, and a team of collaborators.

There are a few reasons why this knowledge gap could exist:

- Cybersecurity professionals may not be as informed about how AI can impact overall strategy.

- Leaders may underestimate how difficult it could be to implement AI strategies within existing cybersecurity practices.

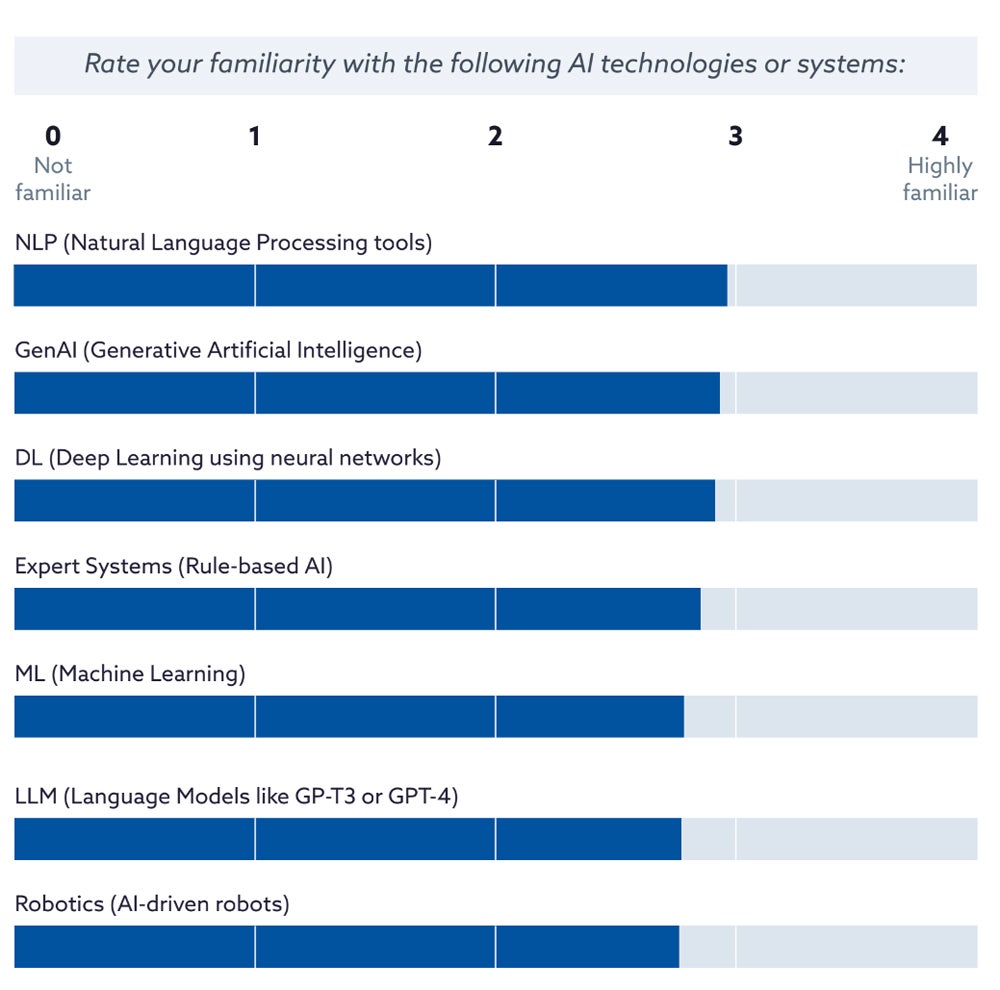

The authors of the report point out that some data (Figure A) indicates that respondents are as familiar with generative AI and large language models as they are with older terms like natural language processing and deep learning.

Figure A

The report's authors note that the prevalence of familiarity with older terms, such as natural language processing and deep learning, could indicate that respondents are conflating generative AI with popular tools like ChatGPT.

“It's the difference between being familiar with consumer-level GenAI tools versus professional/enterprise level that is most important in terms of adoption and implementation,” Baron said in an email to TechRepublic. “That's something we're seeing across the board with security professionals at all levels.”

Will AI replace cybersecurity jobs?

A small group (12%) of security professionals believe that AI will completely replace their jobs within the next five years. Others are more optimistic:

- 30% believe that AI will help them improve parts of their skills.

- 28% predict that AI will generally support them in their current role.

- 24% believe that AI will replace much of their role.

- 5% expect AI to not impact their role at all.

Organizations' goals for AI reflect this: 36% look for AI to improve the skills and knowledge of security teams.

The report points out an interesting discrepancy: while improving skills and knowledge is a highly desired outcome, talent is last on the list of challenges. This could mean that immediate tasks, such as identifying threats, take priority in daily operations, while talent is a longer-term concern.

Benefits and challenges of AI in cybersecurity

The group was divided on whether AI would be more beneficial to defenders or attackers:

- 34% consider AI to be most beneficial for security teams.

- 31% consider it equally advantageous for both defenders and attackers.

- 25% see it as more beneficial for attackers.

Professionals concerned about the use of AI in security cite the following reasons:

- Poor data quality leads to unwanted biases and other problems (38%).

- Lack of transparency (36%).

- Skills/experience gaps when it comes to managing complex AI systems (33%).

- Data poisoning (28%).

Hallucinations, privacy, data leakage or loss, accuracy, and misuse were other options for what people might be concerned about; all of these options received less than 25% of the votes in the survey, where respondents were invited to select their top three concerns.

SEE: The UK's National Cyber Security Centre found that generative AI can improve attackers' arsenals. (TechRepublic)

More than half (51%) of respondents answered “yes” to the question of whether they are concerned about the potential risks of over-reliance on AI for cybersecurity; another 28% were neutral.

Intended uses of generative AI in cybersecurity

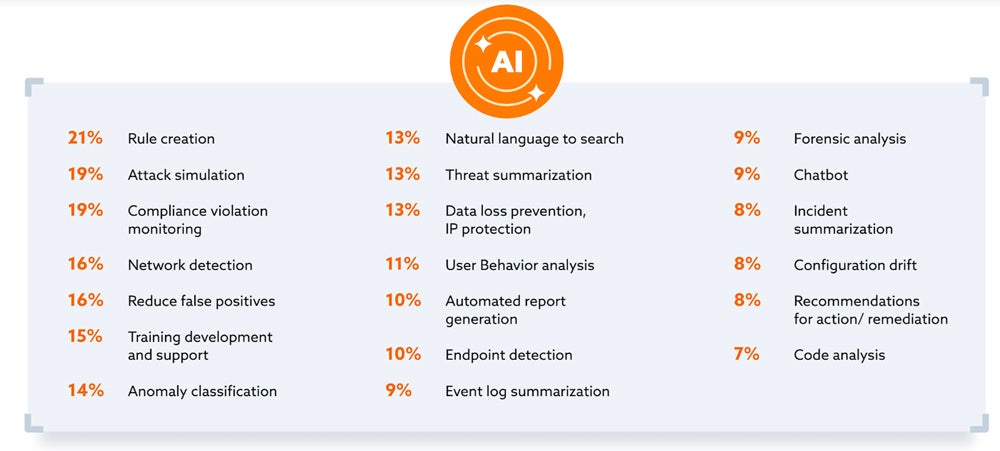

Organizations planning to use generative AI for cybersecurity have a wide variety of intended uses (Figure B). Common uses include:

- Creation of rules.

- Attack simulation.

- Monitoring compliance violations.

- Network detection.

- Reduction of false positives.

Figure B

How organizations are structuring their teams in the age of AI

Of those surveyed, 74% say their organizations plan to create new teams to oversee the safe use of AI in the next five years. The way those teams are structured can vary.

Today, some organizations working on AI implementation put it in the hands of their security team (24%). Other organizations give primary responsibility for AI implementation to the IT department (21%), the data science/analytics team (16%), a dedicated AI/ML team (13%), or senior management/leadership (9%). In rarer cases, DevOps (8%), cross-functional teams (6%), or a team that doesn't fit into any of these categories (listed as “other,” 1%) takes responsibility.

SEE: Hiring Kit: Prompt Engineer (TechRepublic Premium)

“It is clear that AI in cybersecurity is not only transforming existing roles, but also paving the way for new specialized positions,” wrote lead author Hillary Baron and a team of collaborators.

What kind of positions? Generative AI governance is a growing subfield, Baron told TechRepublic, as is AI-focused training and upskilling.

“In general, we are also starting to see job postings that include more AI-specific roles, such as prompt engineers, AI security architects, and security engineers,” Baron said.